Double Your App Conversion Rate with App A/B Testing. Here’s How.


December 20, 2018

This is a guest post on A/B testing by Alexandra Lamachenka from SplitMetrics.

Do you want to dramatically increase the number of app installs you’re getting?

When you think about strategies that would allow you to do this, you usually think about how you can steer more people to your app page. But is that actually all that you need?

Each app page has its own conversion rate, which means that not everyone who lands on the app page will actually convert into a user or customer.

An app conversion rate is key to understanding whether the app store page elements increase incoming traffic and convince people to install the app. Traditional thinking says: if your app conversion rate is low (compared to an average app conversion rate of 26%), you start optimizing various elements and continue until you see installs doubling while user acquisition efforts remain unchanged.

Sounds terrific, right? One of the challenges is to identify those specific elements that influence app conversion rates. And the tip here is: stop guessing.

You never know whether a new set of screenshots is going to boost or ruin your app conversion rate. The risk-free way to find influential elements and develop winning combinations is to split test.

What is app A/B testing?

In essence, A/B testing is a method of comparing two options and choosing the one that gives the best results. The logic behind it is simple:

Your opinion is subjective and the best way to figure out how your audience interacts with a specific element is to actually observe and quantifiably measure it.

To do so, you split an audience into equal groups and send each group to a different variant of one element. Each group represents the whole audience and behaves like an average user would. As a result, you clearly see whether users lean towards the 1st, 2nd or 3rd (in the case of multivariate testing) option and can apply the winner to improve the overall element performance.
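As a rough illustration of how such a split can work, here is a minimal Python sketch that assigns each visitor to a variant by hashing an ID. The function name `assign_variant`, the experiment label and the user IDs are all hypothetical, and this is not how any particular testing tool is implemented; it only shows one common way to get stable, roughly equal groups.

```python
import hashlib
from typing import Sequence


def assign_variant(user_id: str, experiment: str, variants: Sequence[str]) -> str:
    """Deterministically map a visitor to one variant.

    Hashing the experiment name together with the user ID keeps the
    assignment stable across visits and spreads users roughly evenly.
    """
    if not variants:
        raise ValueError("at least one variant is required")
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]


# Hypothetical experiment with two screenshot sets and made-up user IDs.
variants = ["A", "B"]
counts = {name: 0 for name in variants}
for i in range(10_000):
    counts[assign_variant(f"user-{i}", "screenshots-test", variants)] += 1
```

Because the assignment depends only on the ID and the experiment name, a returning visitor always sees the same variant, which keeps the groups clean for the whole test.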

Marketers often associate A/B tests with web pages. I subscribe to the notion that A/B tests can be utilized for any element:

- Which name promotes your product most effectively?

- Which CTA (Call To Action) gets clicks?

- Which screenshots drive app store installs?

To answer these questions, you test every step – from acquisition to conversion to purchase – and optimize its elements.

For app developers, the key decision users make involves whether or not they download the app when they land on the app store page. Optimizing all elements can positively influence that decision.

What app store page elements to test?

App A/B testing is primarily used to optimize the app conversion rate at the following stages:

- Finding: Search and Search Ads

- Comparing with competitors: Category

- Deciding on an install: App Store Page

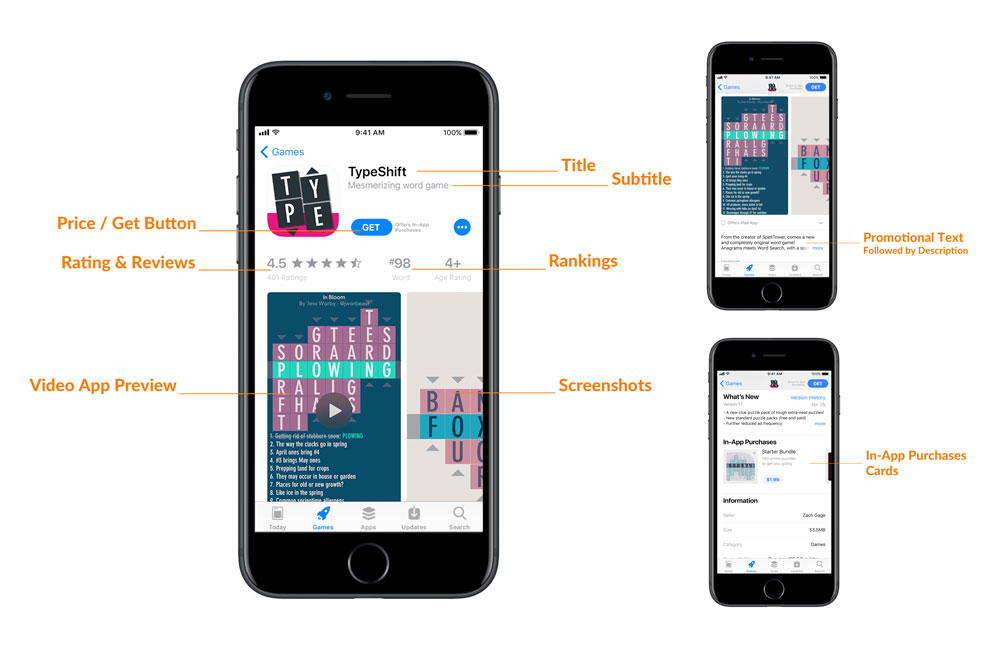

The good news for mobile marketers is that unlike a web page where a few dozen elements can influence a conversion, on the app store a user makes a decision based on only 8 of them: title, icon, screenshots, description, video, rating and reviews, price and in-app purchases.

With the recent App Store redesign, a product subtitle and promotional text have been added to this list.

Let’s take a closer look at the 5 current elements that have the strongest influence on app page performance.

1. Title

Even though title optimization alone will not have any significant influence on the app’s conversion rate, adding keywords to a title will improve search rankings and attract more organic users.

In addition, since a title is the first thing users see when they browse a category or search, it’s one of two elements you can use to distinguish a product from competitors.

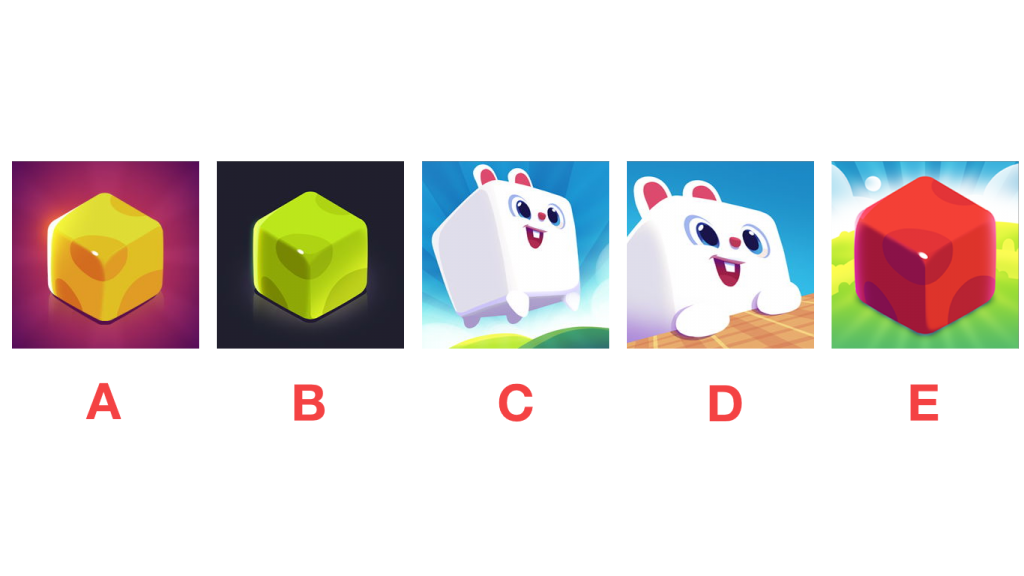

2. Icon

Just like a title, an icon is the first element people see when they browse the app store or compare your product with competitors. With so many options, it has to stand out, speak to users, and connect with them.

When it comes to an icon, a marketer usually tests how graphics, simplicity, colors, characters and a logo influence the app’s conversion rate and user engagement.

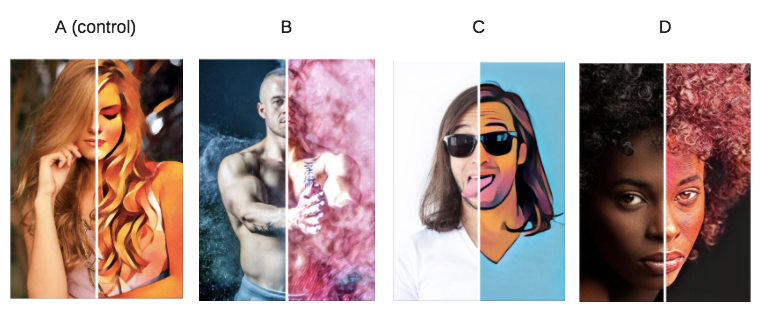

3. Screenshots

Screenshots are absolute deal-makers or deal-breakers for search, search ads and an app page. In most cases, screenshots are the only source of information people use to make their decision. To be competitive, screenshots have to not only accurately reflect the product but also engage the potential user.

Actual app screenshots, captions, experiences, localizations and orientations are the tools that app developers mix to improve communication with potential users.

To improve communication with potential users, app developers usually start by changing the order of the screenshots and analyzing which visuals and captions work best. Testing and seeing which of the features are most appealing to users helps with showcasing experiences – not just promo images.

Surprisingly, screenshot orientation (landscape or portrait) can also affect potential users’ interest in an application, as it is strongly connected to behavioral habits. For instance, tower defense gamers are most likely to react positively to landscape screenshots, whilst portrait screenshots engage photographers.

4. Description and Promo Text

On the App Store (on mobile), people see only the first 167 characters of a description, alongside the promotional text that app developers can add since the App Store redesign. Therefore, only the first few lines actually influence the conversion rate for an app page.

To make these descriptions resonate with their audience, marketers play around with the call to action, social proof, features, length and localizations.

5. Video

The first thing users currently see after the icon is a short autoplaying video called an App Preview.

From our A/B testing experience, promo videos can either boost downloads or decrease them, depending on the app and how the videos are made. The “poster frame” acts as the first screenshot, so it is critical to optimize it; otherwise this visual may perform worse than what would otherwise be the first screenshot.

As high-quality videos can be rather expensive, some marketers prefer to A/B test initial scenarios before finishing the video and uploading it to the app store. App Previews (up to 3 are available) have had a bigger impact since iOS 11, as they autoplay both on the App Store page and in the search results, making a listing with a video quite attractive to the eye (especially for landscape apps). The App Preview poster frame is somewhat less critical because of the autoplayed video; however, it still appears in some cases (in India and China, and when Apple features an app: because of the promo graphic, the video does not autoplay until the user scrolls down a bit).

Will the App Store redesign switch testing priorities?

Since the update shifts the focus from the app’s vision to the product side, another element that will be worth testing is in-app purchase cards.

Each in-app purchase will be presented in the form of a card with an icon, title, short description and price. Users are still sensitive to in-app purchases, and these items may ultimately have a stronger impact on the conversion rate than, say, a description.

As Apple cuts titles to 30 characters, the subtitle starts playing an important role. Should it describe the product, feature a killer CTA or name key benefits? Even though it is hard to determine a winning strategy right now, it is clear that this element will need thoughtful optimization.

Coming up with a list of tests

Having a clear structure and defining specific goals are key to the success of app A/B testing.

Random tests do not allow marketers to understand the real problem behind an element’s low performance. Extrapolating results from such random tests does not provide a full picture. Changing a button color and getting +10% on a conversion rate won’t answer the question of why users preferred the new color. Questions still remain: what if the preference was conditioned by hidden habits? Would the new color skyrocket CTR if you used it in the logo?

You never know until you test it.

Every successful test starts with a mature hypothesis that is usually based on customer surveys and competitor research. What’s even more important, it correlates with an overall marketing strategy: paid traffic, organic, e-commerce, etc.

Every successful A/B test starts with a mature hypothesis and research and considers a user acquisition strategy.

Don’t think about A/B tests only in terms of app store page elements but also in terms of optimization at each step. And these steps will vary depending on which channels an app developer uses.

For example, when users find an app through paid ads, they start their journey on the app store page where screenshots play the greatest role. When coming from organic traffic, most of the user decision is made by looking at the icon and the title.

Once you’ve determined which user acquisition channels you’ll A/B test for, the next step for an app developer is to set clear goals. These can be:

- To evaluate a product. Pre-launch testing allows for developing an initial vision and position. In the case of pre-launch testing, a marketer uses page elements to A/B test messages and determines ones that resonate with users.

- To increase an app conversion rate. A boost can be achieved by adding videos, changing icons or screenshots, adding CTA and social proof to a description, localizations, etc. A marketer tests hypotheses that can bring more clients but are expensive and time-consuming in development and implementation.

- To validate a new traffic channel. With A/B testing, a user acquisition manager sees how people coming from new traffic channels interact with content and which elements engage them the most. Focusing on these elements will allow you to increase the conversion rate while reducing user acquisition costs.

- To validate product positioning. The product does not change but positioning does. Before repositioning a product, a marketer will want to understand whether users actually receive and understand the new message.

- To validate different audience segments. With app A/B testing, you can run experiments to analyze not only page elements but an audience. In this case, testing tells you how well you connect with your target audience and helps you to optimize a page’s message.

Okay, so what do we have so far?

- Users and competitors are analyzed.

- User acquisition channels and the app store page elements people interact with are determined.

- Clear goals are set…

Now what?

The last step is to identify those app store page elements that hold back the conversion rate and user growth.

One of the most in-depth resources on finding these “weak points” comes from this ASO checklist. Keeping goals, channels and research in mind, you perform an app page audit and come up with a list of elements that require optimization.

A straightforward app A/B testing workflow

I would say that A/B testing is a structured process that can be illustrated as a cycle. You start with one element and then repeat the cycle again and again. Each curl of the spiral will shed new light on how a goal can be achieved.

App A/B testing is not linear – it is always an ongoing process.

Therefore, an app A/B testing workflow – a spiral curl – goes like this:

- Research and brainstorming – In this step, you define what you want to test and what your final goals are.

- Determine your variants – Once you have formulated your hypothesis and identified the elements you’ll be testing, you create variations.

- Run the test – In this step, you identify and drive your audience to two variations of one page.

- Analyze data and review results – The coolest part: you determine the winner! Here you look at how people interact with different pages and, ultimately, whether they press “Install”.

- Apply the winner – If you do have a clear winner, you can either implement changes or use it as a starting point for subsequent tests.

- Run follow-up tests – Conversion optimization is an ongoing process and one test can hardly change anything. Continue running tests even if you’re already happy with the results. There’s always room for growth and improvement.

The smoothest scenario is when you get significant results on the first try. However, you may face a situation where new variants show little or no difference in conversion rates. There are always specific reasons for it, including minuscule changes, poor research, and ill-defined or incorrect goals.

Selecting an app A/B testing tool

Once you have decided to start conducting app A/B testing, the next step is to choose a tool for this job.

If you want a free basic tool and have only an Android app to test, go with Google Play Store Listing Experiments. One disadvantage of this method is that behavioral patterns of iOS users can significantly differ from those of Android users. Another downside of Google Play Experiments is that you cannot launch several tests at once or work with apps that haven’t been released.

Some app developers perform A/B tests by uploading changes sequentially and measuring results. This method violates the basic principle of A/B testing that requires simultaneous experiments. And let’s face the truth: marketers running tests on a live product run the risk of ruining the conversion rate and losing clients.

Another method, which I personally used four years ago for optimizing apps’ creatives, is testing them through banners on social media. It gives insights into which banners and messages people like, but that’s mostly it. Because a banner stands alone, this method does not consider an app’s environment (the app store page). Therefore, the results are usually inconclusive and you should re-test them on the app store.

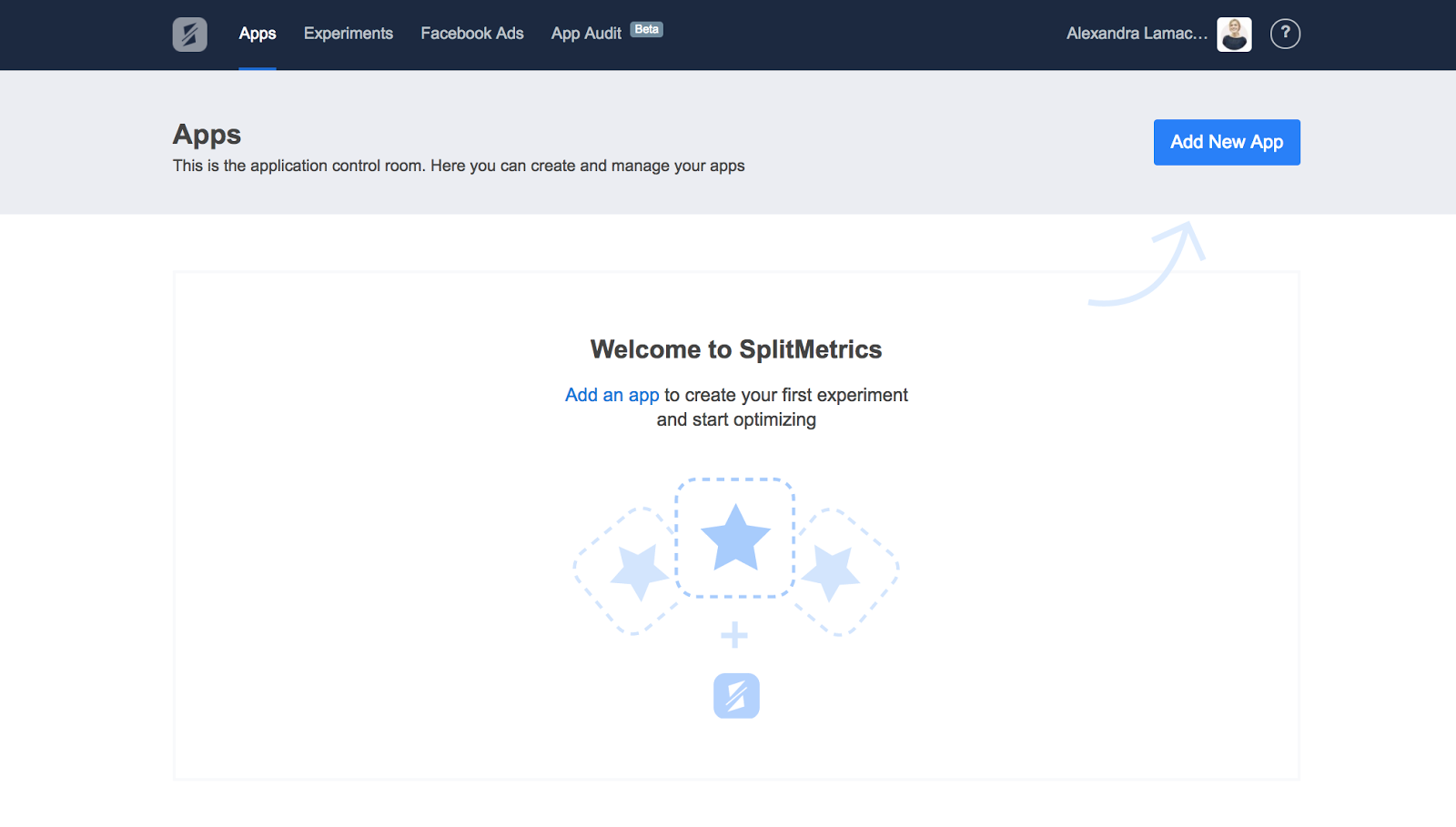

A solid alternative that gives representative results is to go with a third-party tool like SplitMetrics. These tools allow you to test both iOS and Android apps, run multiple tests at once, and perform pre-launch testing.

There is a specific reason for using a third-party testing tool:

Working with app A/B testing software, you do not play with a live version of the page.

Instead, SplitMetrics creates web pages emulating the App Store where you can change and edit any element. Then you drive traffic to these pages to see what works and what doesn’t.

How to run an app A/B test with SplitMetrics

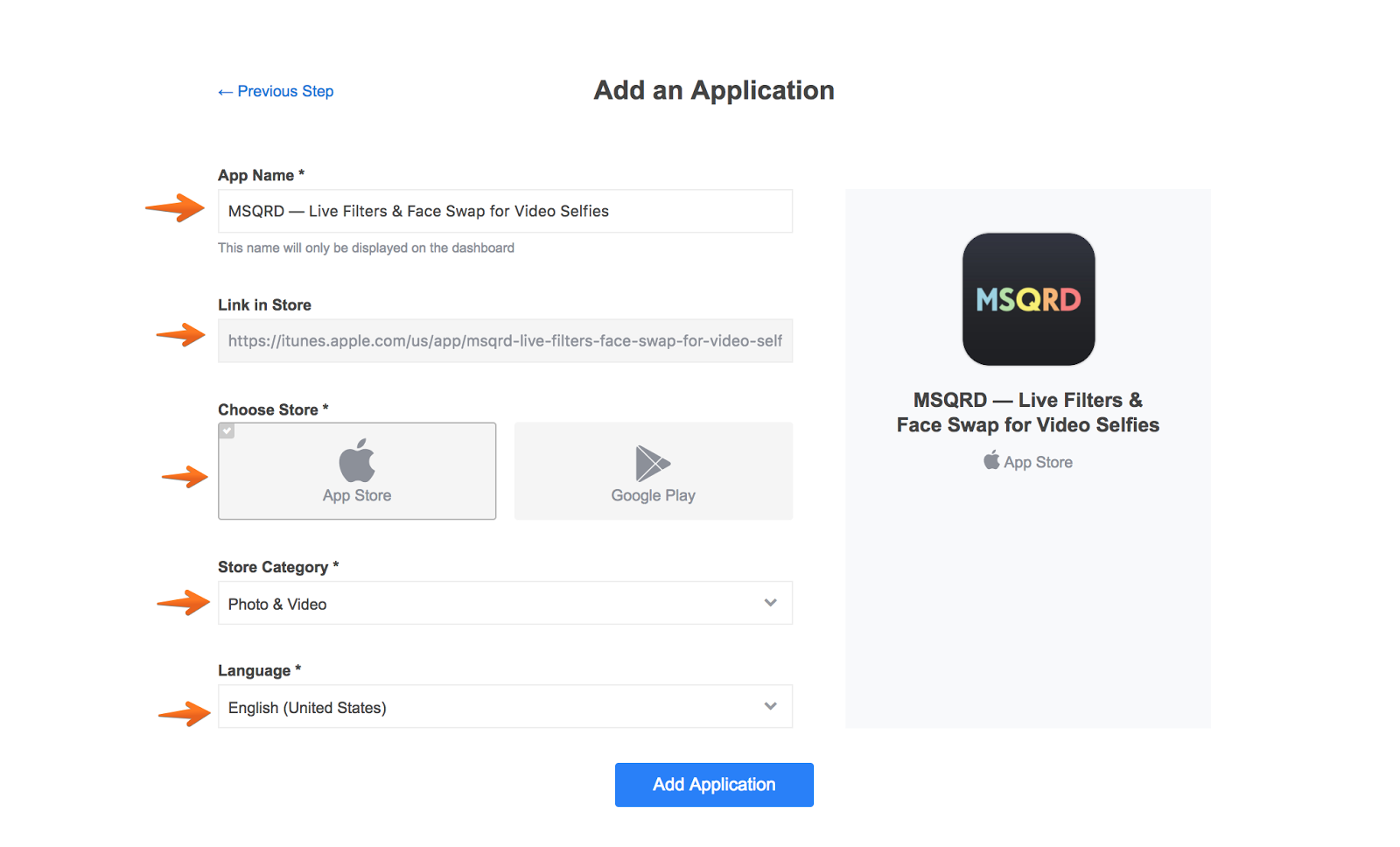

So, the next step is to set up an app A/B test. When you first create a test, log into SplitMetrics and click the “Add New App” button.

Next, you are going to connect an app by entering its store URL or choosing it manually. Once done, the info will be pulled from the store automatically. Review it and click “Add Application”.

At this point, the app is connected and ready for experiments.

Remember the A/B testing workflow? Running a test is the third step in it – you need to do your research and prepare variants first.

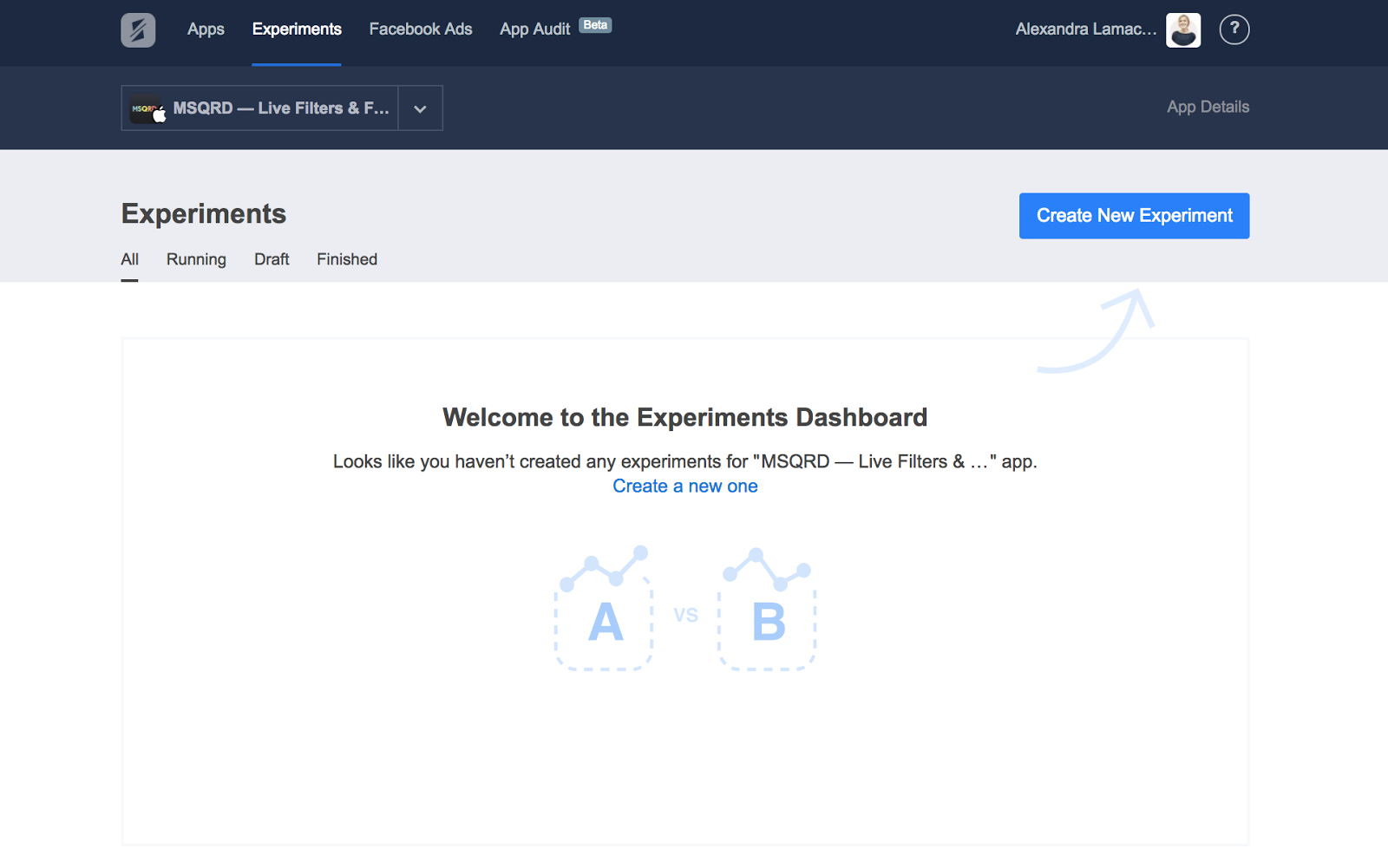

Once the first two stages are completed, you continue with the coolest part by clicking the “Create New Experiment” button.

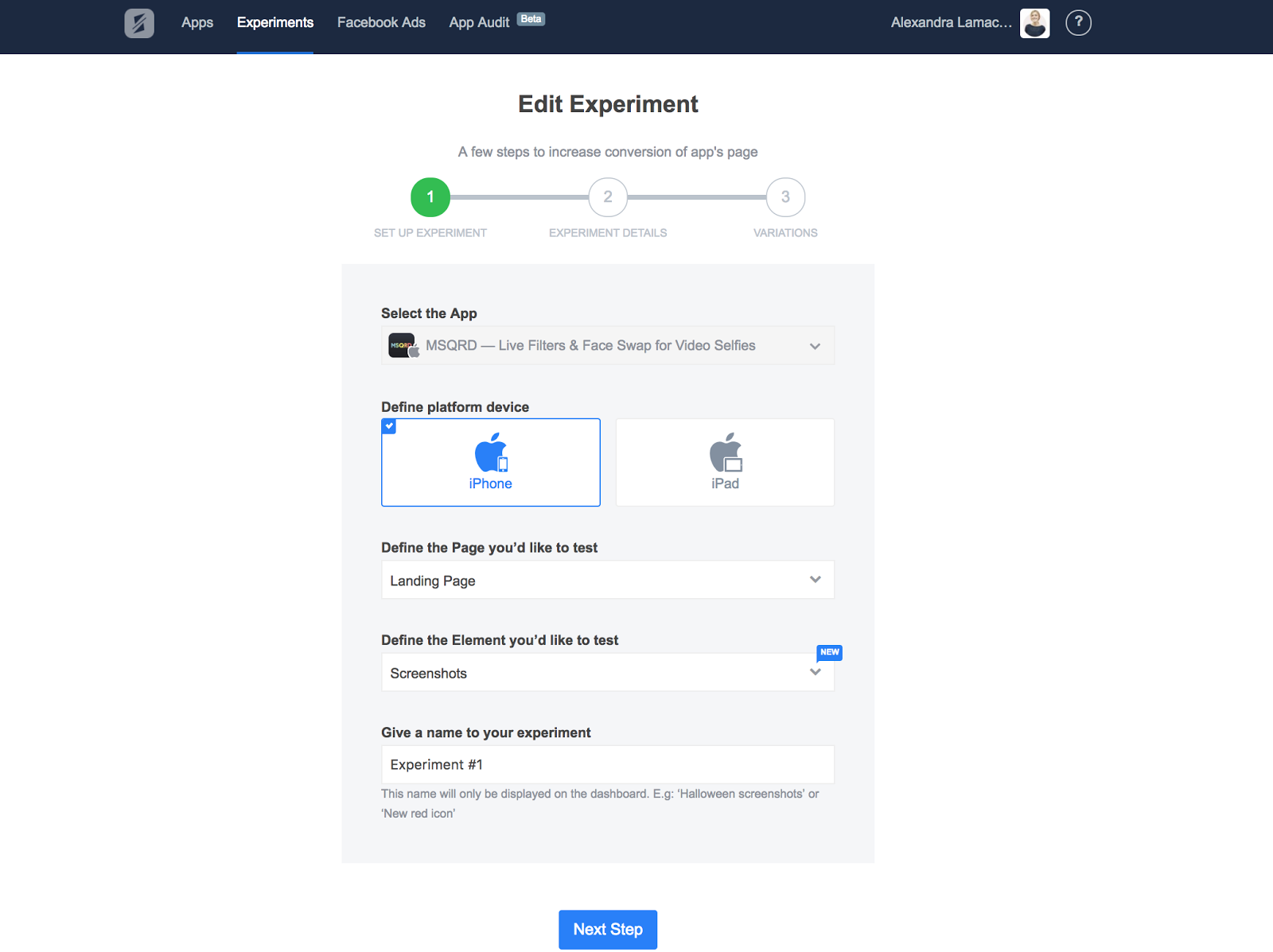

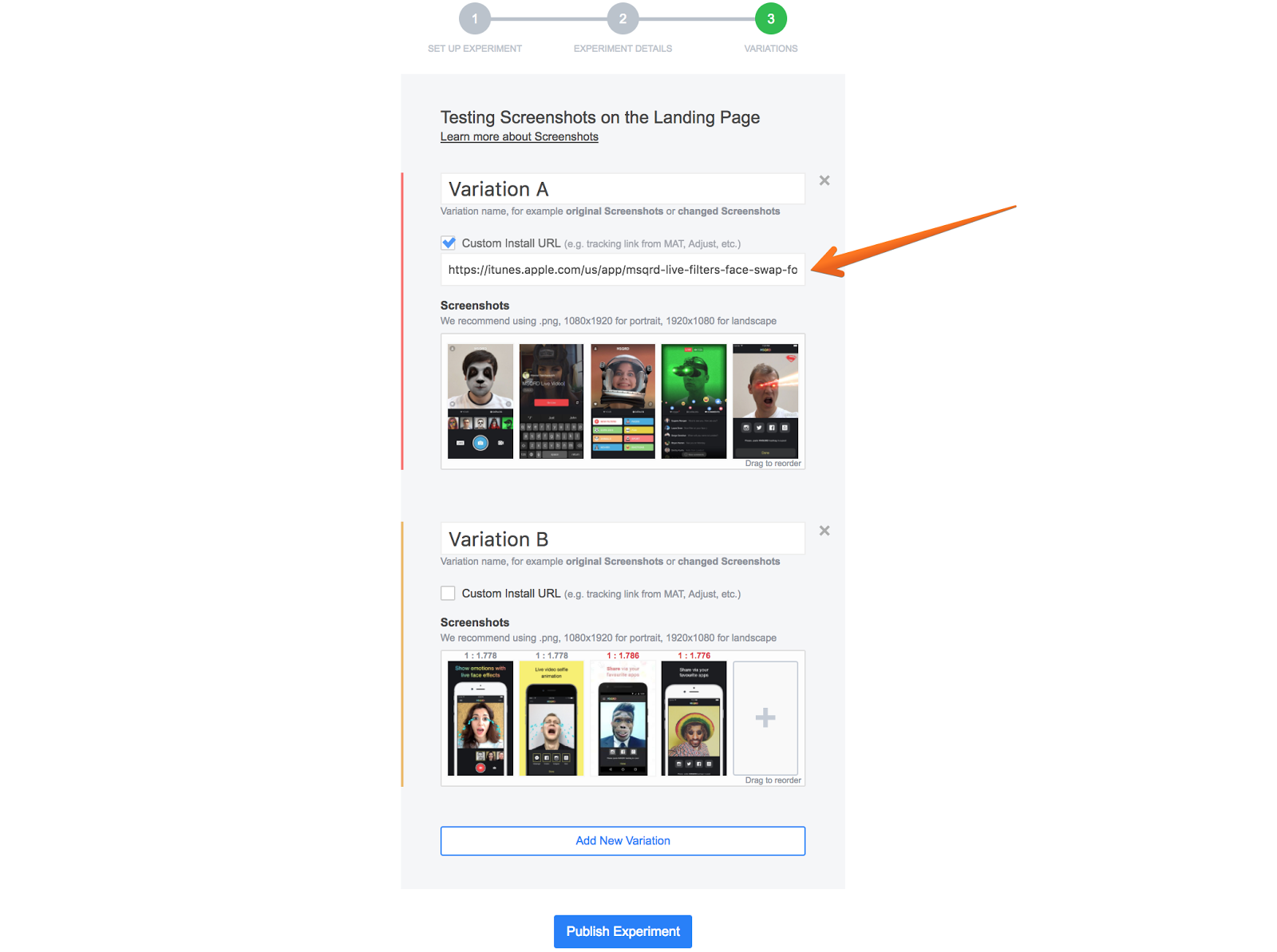

In the pop-up window you choose the device you want to test, a page (search, app page, category, search ads), and an element: screenshots, description, title, icon, video, etc. In this experiment, we are going to test screenshots.

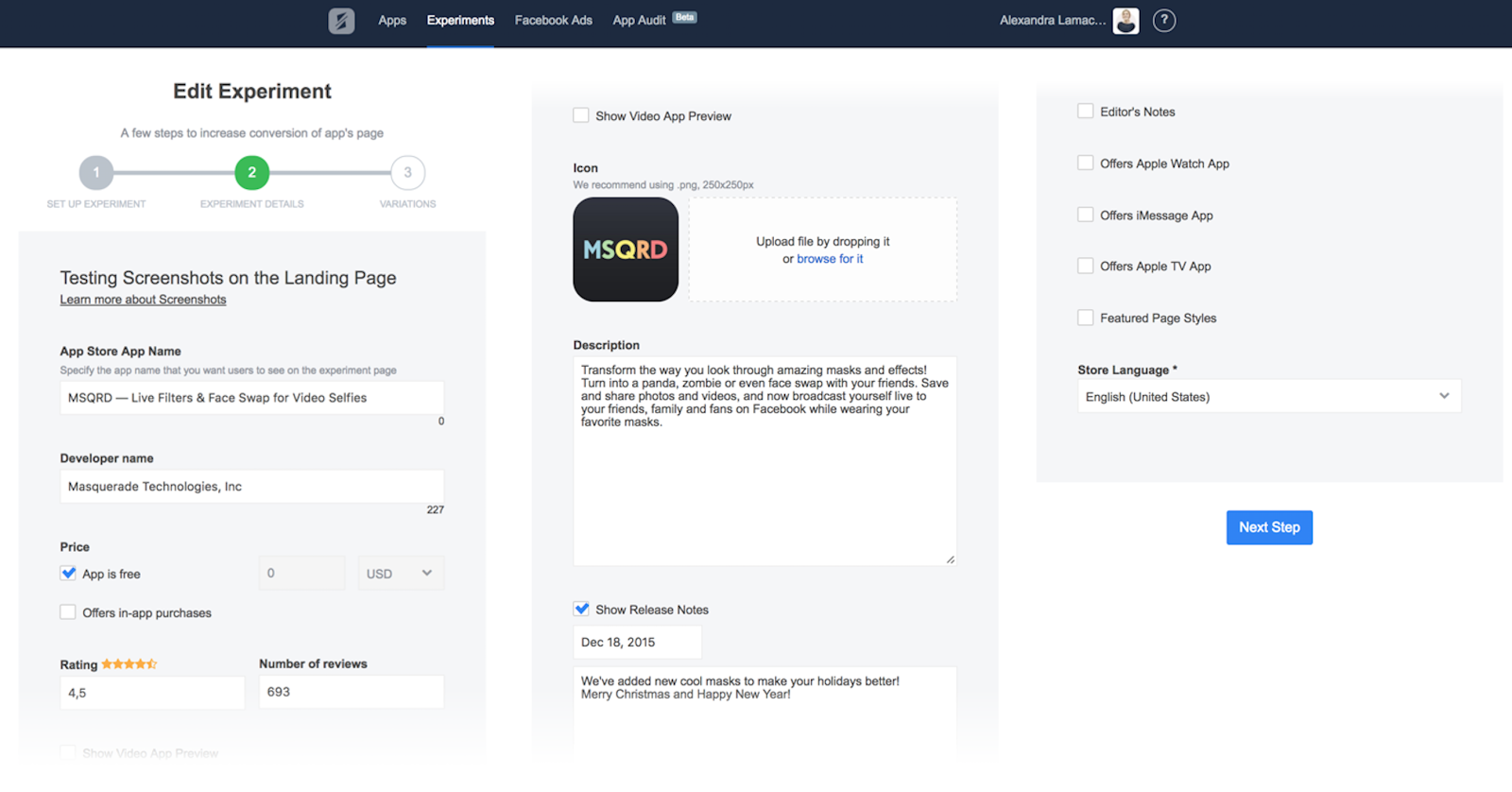

Now we’re at step five, where you need to set up the experiment details. This information is automatically pulled from the connected app page.

Although every single element on the page can be changed, I recommend staying focused on your initial strategy while updating elements for a test. Otherwise, an unplanned change can influence the app conversion rate and kill the whole experiment.

Having said that, this is a powerful tool to evaluate the importance of such app page elements as reviews and rating – the things you cannot influence in a direct way but can add to your marketing objectives.

At step six, since we are running an experiment with screenshots, we add our variations.

It’s important to keep your goals in mind. So before publishing an experiment, you may want to add a custom install URL – for example, one from Adjust.

It’s great to get more installs from the app store page, but what can matter even more is whether your new creatives accurately represent your product. You can find out by adding tracking links from app analytics services and measuring in-app engagement and retention metrics alongside the app conversion rate and other external interactions.

The experiment is now ready.

Finally, for the last step of the process, you will drive traffic to the experiment link that SplitMetrics has generated for you. The service automatically splits people who click the link and steers them to the different variations.

It is crucial that the traffic channel you’re going to use for the experiment reaches a relevant and homogeneous audience. Therefore, good tools for this purpose would be:

- Facebook ads campaigns

- AdWords campaigns

- Website banners whose location remains unchanged over the whole testing period

After enough traffic has been sent to the variations, you can analyze results.
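How much traffic is “enough” depends on your baseline conversion rate and the size of the uplift you hope to detect. The sketch below uses the standard normal-approximation formula for comparing two proportions; the function name `visitors_per_variant` and the default significance level (5%) and power (80%) are my own illustrative assumptions, not settings taken from any specific tool.

```python
from math import ceil, sqrt
from statistics import NormalDist


def visitors_per_variant(base_rate: float, expected_rate: float,
                         alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate visitors needed per variant for a two-sided test.

    base_rate and expected_rate are conversion rates between 0 and 1.
    alpha is the accepted false-positive rate, power the chance of
    detecting the uplift if it is real (both are assumed defaults).
    """
    if not (0 < base_rate < 1 and 0 < expected_rate < 1):
        raise ValueError("rates must be strictly between 0 and 1")
    if base_rate == expected_rate:
        raise ValueError("rates must differ to estimate a sample size")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    pooled = (base_rate + expected_rate) / 2
    spread_null = sqrt(2 * pooled * (1 - pooled))
    spread_alt = sqrt(base_rate * (1 - base_rate)
                      + expected_rate * (1 - expected_rate))
    numerator = (z_alpha * spread_null + z_beta * spread_alt) ** 2
    return ceil(numerator / (expected_rate - base_rate) ** 2)


# Example: hoping to move from the 26% average cited earlier to 30%.
needed = visitors_per_variant(0.26, 0.30)
```

The smaller the change you want to detect, the more visitors each variation needs, which is one reason tests with very minor changes often end without a clear winner.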

Depending on your goals and the results you’re looking for, you are going to evaluate such metrics as:

- CTR

- App conversion rate

- Engagement rate

- Time spent on a page before a click to install

- Average time on a page, etc.

Delving deeper into analytics, you can also use hit and scroll maps as x-ray glasses to see how people behave on a page and what elements actually influence their decision to install the app.

Together, these metrics help you select a winning variation that you can implement on the live app page before continuing with follow-up tests.

If the new version has shown little to no difference, then you move on to other experiments in your list or try to find a problem with the initial test (tip: it can be because of changes that were too minor, a low confidence level, low traffic or a wrong target audience).
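To check whether a difference between two variations is more than noise, one common approach is a two-proportion z-test. The sketch below is a generic statistical illustration rather than the method any particular tool uses; the function name and the visitor and install counts are made up for the example.

```python
from math import sqrt
from statistics import NormalDist
from typing import Tuple


def two_proportion_z_test(installs_a: int, visitors_a: int,
                          installs_b: int, visitors_b: int) -> Tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    rate_a = installs_a / visitors_a
    rate_b = installs_b / visitors_b
    # Pooled rate under the assumption that both variants convert equally.
    pooled = (installs_a + installs_b) / (visitors_a + visitors_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    if std_err == 0:
        raise ValueError("cannot test when nobody or everybody converted")
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts: variant A converts 260 of 1,000, variant B 300 of 1,000.
z_score, p_value = two_proportion_z_test(260, 1000, 300, 1000)
```

A small p-value (0.05 is a common threshold) suggests the difference is unlikely to be chance alone; a large one points to the low-traffic or too-minor-change problems mentioned above.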

App A/B testing case studies

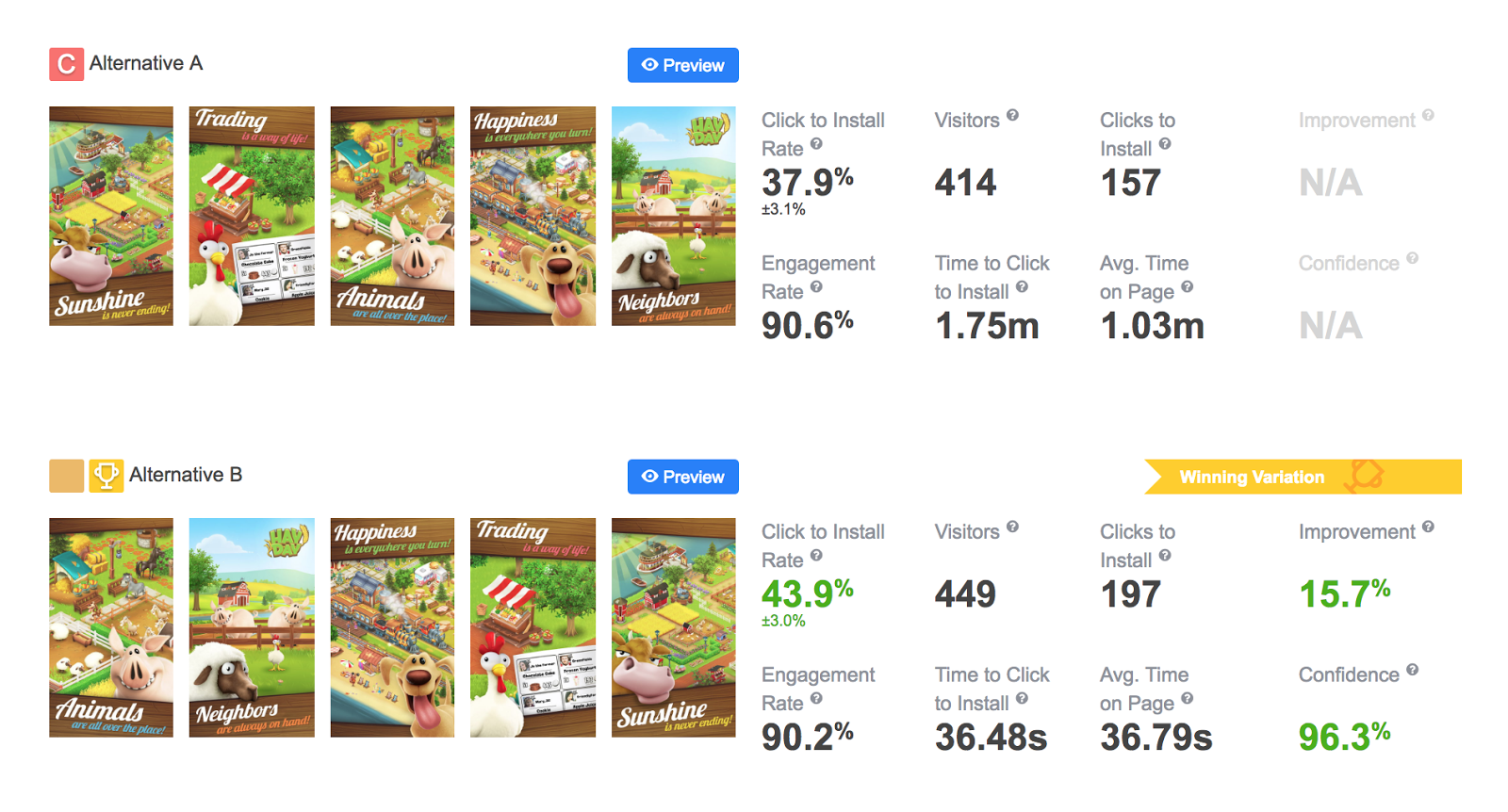

Let’s have a look at a few case studies to better understand the impact A/B testing can have.

Understanding the audience: Screenshots A/B testing gave Rovio 2.5M more installs

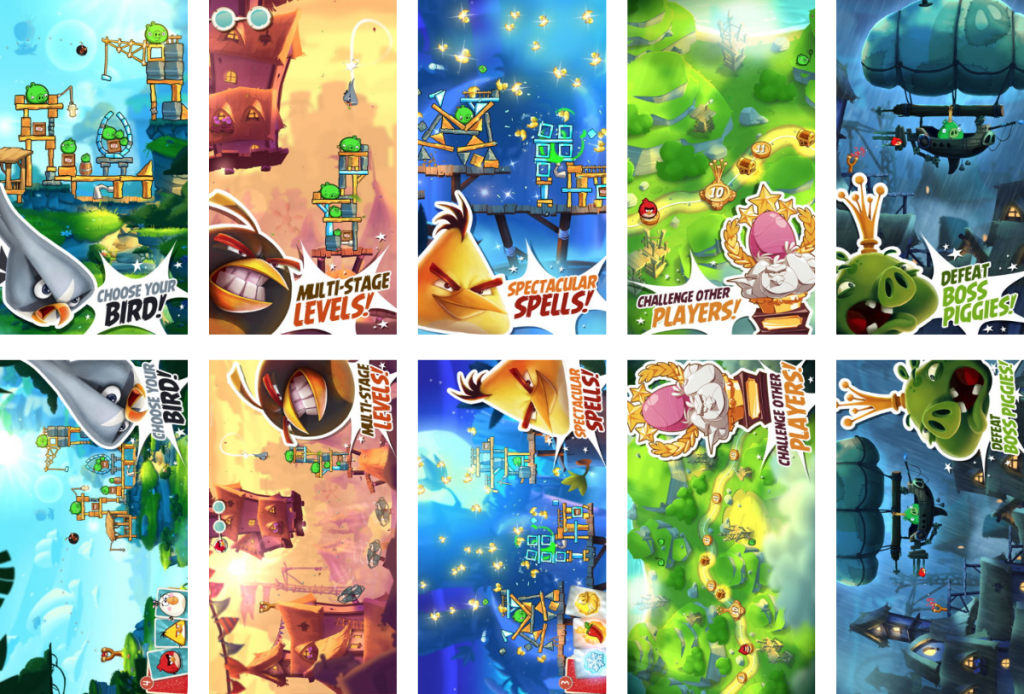

The assumption that Angry Birds 2 is played by people who have only one game on their device and usually hold their phones in portrait mode led Rovio to compare the performance of portrait and landscape screenshots. Portrait screenshots showed a 13% higher conversion rate, which turned into millions of new users.

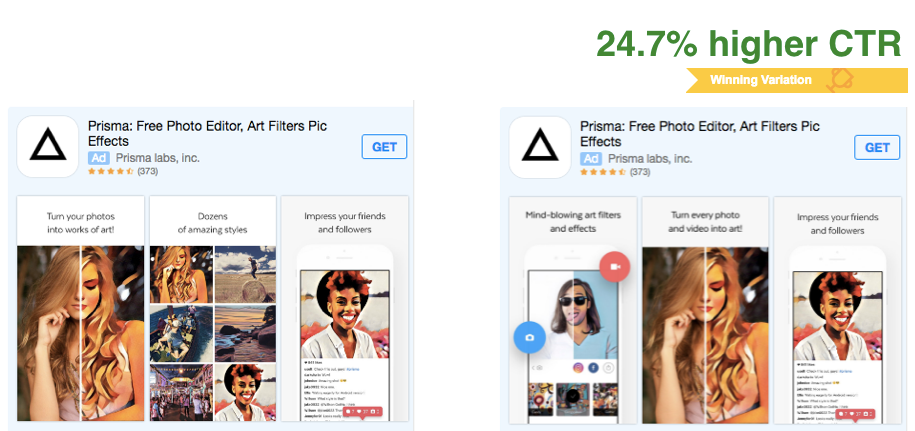

Complex testing: How Prisma achieved +24.7% conversion uplift

This is an example of a complex series of split tests with a conversion uplift goal. The series involved testing screenshots, banners and a description. After the tests, Prisma achieved a 24.7% increase in conversion, which would have been impossible had they been confined to one test.
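Part of the reason a series of tests can outperform a single one is that relative uplifts compound. The short sketch below shows the arithmetic; the per-test uplift figures are purely hypothetical and are not Prisma’s actual results.

```python
from math import prod
from typing import Iterable


def total_uplift(relative_uplifts: Iterable[float]) -> float:
    """Combine successive relative uplifts (0.05 means +5%) into one figure.

    Each winning variant becomes the new baseline, so the gains multiply
    rather than simply add up.
    """
    return prod(1 + uplift for uplift in relative_uplifts) - 1


# Hypothetical winners from three consecutive tests: +5%, +4% and +3%.
combined = total_uplift([0.05, 0.04, 0.03])
```

With these made-up numbers the combined gain comes out slightly above the plain sum of 12%, and the gap widens as the individual uplifts grow.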

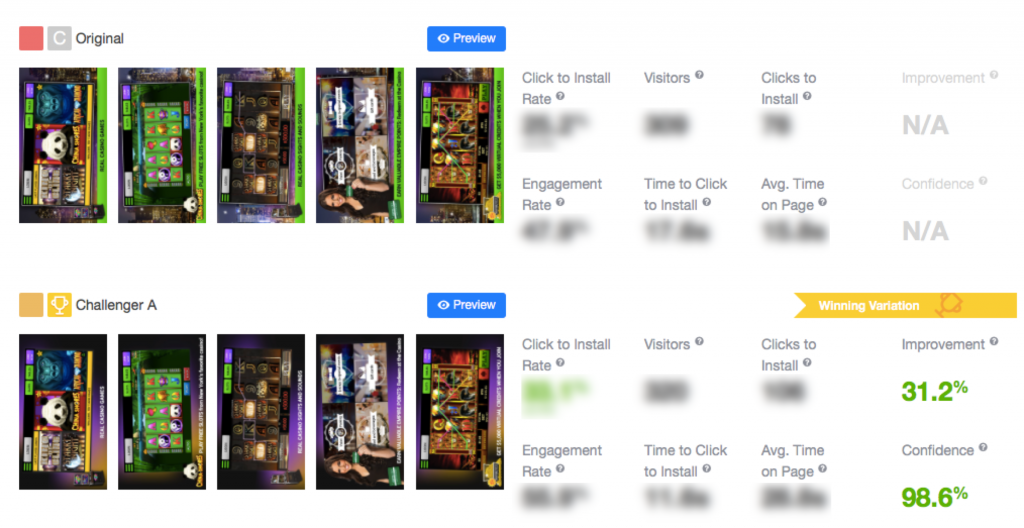

Empire City Casino: A single focus on screenshots can increase conversion by 30%

A smart design change to the background image resulted in a 31% conversion uplift. Empire City Casino switched the focus to features by simply blurring screenshot backgrounds.

To remember:

- Do not build up user acquisition channels until you are sure your app page converts.

- Elements that currently (more to come with iOS 11) influence an app conversion rate in search, search ads, app store page and category are: title, icon, screenshots, description, price, rating and reviews, in-app purchases and video.

- For your tests to generate results, start with a mature hypothesis based on customer surveys and competitor research.

- To identify elements for optimization, you should look at your marketing strategy and key user acquisition channels.

- Finding elements that stunt your conversion rate and user growth is key to a successful experiment.

- A/B testing is an ongoing process that should include a successive series of tests. Algorithm changes and iOS updates often challenge assumptions.

- Traffic channels you’re going to use for app A/B testing should reach a relevant and homogeneous audience.

- There is always room for improvement!

About the author

Alexandra Lamachenka is Head of Marketing at SplitMetrics, an app A/B testing tool trusted by Rovio, MSQRD, Prisma and Zeptolab. Alexandra shows mobile app development companies how to hack app store optimization by running A/B experiments and analyzing results.

