The best ways to test and optimize your Facebook ad creatives

Facebook Ads

November 29, 2018

We hear (and talk) a lot about split testing.

You’re probably already running A/B tests on the Play Store (if not, you should) as part of your App Store Optimization strategy.

But how do you find the mobile ads that perform the best?

Split testing your ad creatives, or at least comparing them, is one of several best practices for Facebook app install ads, and it helps you improve your paid user acquisition.

There are a few different ways you can do this. Each has its own advantages and drawbacks, as well as a few pitfalls to look out for.

In this post we detail different methods and share insights from 3 industry experts in User Acquisition for apps: Thomas Petit (8fit – a must-follow on Twitter), Shamanth Rao (FreshPlanet – has a great podcast, How Things Grow, and is also a pleasure to work with as a client) and Andrey Filatov (Wooga – with whom it’s been great to work on several videos).

Whether you’re running Facebook app install ads campaigns or app event optimization campaigns, this article should help you assess and eventually optimize your creatives better.

Why compare the performance of app install ad creatives?

The idea is of course to test different versions of your Facebook ads so you can see what performs best and optimize future campaigns.

You can follow all the best practices and guides in the world, but some optimizations are specific to your case. This is true for other kinds of optimization related to your audience or bidding, but here we focus just on the creatives part.

Nobody has the “secret sauce”. And there are some insights that only you can get.

By finding what works best, you can do more of it.

Note: this is something you want to do for all your mobile app install ads, but we focus here on Facebook and Instagram ads.

What to test?

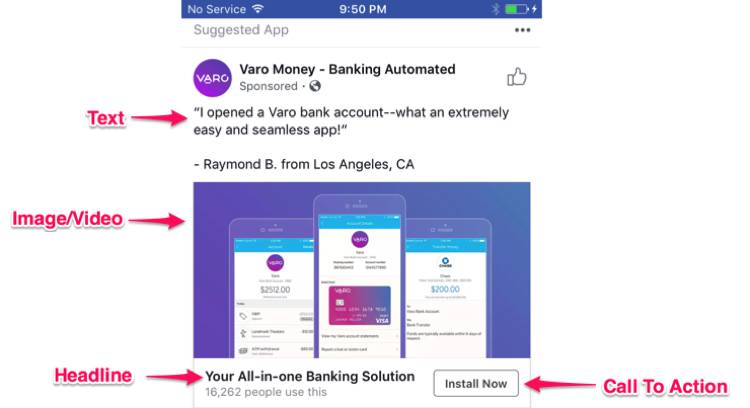

Regardless of the method(s) you’re using, here are some examples of things you can test for your app installs or app event optimization campaigns when it comes to your ad creatives:

- A single image ad vs. a single video ad;

- A single video ad vs. another single video ad;

- A single square video ad vs. the same video ad but in portrait;

- A single video ad vs. a carousel ad with two videos;

- An ad with one headline vs. an ad with a different headline;

- An ad with an Install Now call to action vs. an ad with a Download call to action.

When brainstorming your tests, form a hypothesis that you can use to get insights when analyzing the results.

Example: “messaging that highlights social aspects will outperform messaging that highlights competition”.

A great way to find new creative concepts to test for your Facebook ads is to look at your competitors’ app install ad creatives.

Method 1 – Several Facebook ads in one ad set (manual way)

How it works

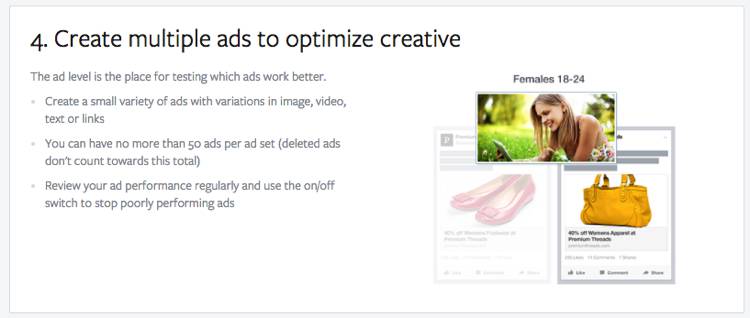

With this first technique you simply create multiple ads (different creatives) in one ad set.

This is not split testing because you are not displaying ads evenly (and randomly) to the same audience, but you trust (not too much, keep reading) that Facebook will serve the best performing ads.

“You won’t have an equal distribution of traffic across all variants, but you get a good enough read of which ad is better.”

– Shamanth Rao, VP Growth & UA at FreshPlanet

Drawbacks of multiple Facebook ads in one ad set

With this method, Facebook optimizes delivery based on the highest CTR.

This means that Facebook will sometimes serve one app install ad (the one it considers best-performing) much more, even though it has a higher CPI than other, better-converting ads (i.e. ads with a better install rate but a lower CTR).

“If you are doing a small soft-launch running a set of 4 different creatives as a part of an ad set may be the most efficient choice. Facebook itself does the media spend allocation based on the best performing creatives — giving much more impressions to the creatives with the highest CTR.”

– Andrey Filatov, Wooga

Advice for optimizing ads with this method

Turn off the ads with lower ROI

You want to monitor your ads closely.

If an ad with a high CTR is served more even though its overall performance is not as good as the others’, pause it. Facebook will then serve the rest of the ads as well, so you can learn what happens further down the funnel for these too.

Thomas shared with us that Facebook doesn’t wait for results to be completely significant, and that many ads stop getting served after very few impressions. That’s why pausing even the well-performing ones can make sense.

“I look at the “impression to install ratio” and pause some ads for which I have enough data to “force” delivery of other ads with lower CTR to be able to learn about what happens in the rest of the funnel for these.”

– Thomas Petit, 8fit

By looking at the “impression to install ratio” you get a combination of the CTR and CR (conversion rate) that can help you figure out which ads to turn off.

In short: don’t let Facebook run everything and don’t look just at the CTR of your Facebook ads. But don’t look just at the CPI/CPA either!
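To make the “impression to install ratio” concrete, here is a minimal Python sketch. It assumes you have copied impressions, clicks and installs per ad from your reports. The `AdStats` class, the ad names and all the numbers are made up for illustration. It shows how the ad with the highest CTR can still bring fewer installs per impression.

```python
from dataclasses import dataclass


@dataclass
class AdStats:
    """Hypothetical per-ad numbers, e.g. copied by hand from a report."""
    name: str
    impressions: int
    clicks: int
    installs: int

    @property
    def ctr(self) -> float:
        # Click-through rate: clicks per impression.
        return self.clicks / self.impressions

    @property
    def install_rate(self) -> float:
        # Conversion rate (CR): installs per click.
        return self.installs / self.clicks

    @property
    def impression_to_install(self) -> float:
        # Installs per impression, which equals CTR x CR.
        return self.installs / self.impressions


# Illustrative numbers only, not real campaign data.
ads = [
    AdStats("video_a", impressions=10_000, clicks=300, installs=30),
    AdStats("video_b", impressions=10_000, clicks=150, installs=45),
]

best_ctr = max(ads, key=lambda ad: ad.ctr)
best_overall = max(ads, key=lambda ad: ad.impression_to_install)

for ad in ads:
    print(f"{ad.name}: CTR={ad.ctr:.2%}, CR={ad.install_rate:.2%}, "
          f"impression-to-install={ad.impression_to_install:.2%}")
print("Highest CTR:", best_ctr.name)
print("Highest impression-to-install ratio:", best_overall.name)
```

In this made-up example, video_a wins on CTR while video_b wins on installs per impression, which is the situation where pausing the high-CTR ad can be worth trying.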

Keep the number of ads reasonable

Do not run too many creatives in the same ad set: 2-3 is recommended.

You can go a bit above (4-5) but don’t go crazy, because it just makes things harder to analyze, especially if you change more than one thing in your ads.

Change only one thing (or keep it straightforward/organized)

Try to change only one thing (headline, text, image/video) so as to facilitate the analysis.

If what you’re trying to find out is which ad creative works better, then you want to change only that part for this ad set. This means that you keep the same:

- Headline

- Text

- CTA

That way you can more easily draw conclusions on the creatives from the Facebook reports.

Thomas Petit also sometimes runs multivariate tests, ending up with something like the following ads in his ad set:

- textA+videoA

- textB+videoA

- textA+videoB

- textB+videoB
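The four ads above are simply every text paired with every video. As a small sketch (the asset names are the placeholders from the list, not real assets), you can generate such a grid like this:

```python
from itertools import product

texts = ["textA", "textB"]
videos = ["videoA", "videoB"]

# Every text paired with every video: 2 x 2 = 4 ads.
ad_variants = [f"{text}+{video}" for text, video in product(texts, videos)]

for variant in ad_variants:
    print(variant)
```

The number of ads is the product of the number of options for each element, so adding a third text or a third video quickly pushes you past the 4-5 ads mentioned earlier.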

Method 2 – Facebook split testing

Facebook’s split testing feature has been around for a while, but the Facebook creative split test greatly simplifies the process of optimizing your Facebook ad creatives, even if it’s not perfect.

How Facebook split testing works

When split testing, you create multiple Facebook ad sets. Each ad set you create has one difference: a variable.

Facebook divides the audience you’re targeting into random groups that do not overlap. It duplicates your ads and tests the ad sets against each other by only changing that one variable.

Each ad set’s performance is measured based on your campaign objective (we focus here on app installs or specific conversions within your app) so you can know which one wins.
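To illustrate what “random groups that do not overlap” means, here is a toy Python sketch. It is not how Facebook implements its split (that mechanism is not described here). The `split_audience` function, the integer user IDs and the fixed seed are all assumptions made for the example.

```python
import random


def split_audience(user_ids, n_groups=2, seed=42):
    """Randomly partition user IDs into n_groups non-overlapping groups."""
    shuffled = list(user_ids)
    # A seeded generator keeps the example reproducible.
    random.Random(seed).shuffle(shuffled)
    # Deal users out round-robin so group sizes differ by at most one.
    return [shuffled[i::n_groups] for i in range(n_groups)]


# Hypothetical audience of 10 users, identified by integers 0..9.
groups = split_audience(range(10), n_groups=2)
for index, group in enumerate(groups):
    print(f"Ad set {chr(ord('A') + index)}: {sorted(group)}")
```

Each user lands in exactly one group, so nobody sees both variants, and the random assignment keeps the groups comparable.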

The variables you can split test

For each test, you need to select which one variable is going to change between your ad sets.

You have the following choice:

- Target audience – so you can find who is more likely to engage with your ad, install your app and use it;

- Delivery optimization – so you can find which type of delivery optimization (for example which app event you select for optimization) gives the best results

- Placements – so you can find where (Facebook mobile feed, Instagram feed, etc.) a specific ad is performing the best;

- Creative – so you can know which images, videos, text, headlines or call-to-action work best with the audience you’re targeting.

Drawbacks of Facebook split testing

Facebook split testing is an interesting feature, but there’s no magic there either.

If you split test two bad audiences, two bad placements or two bad creatives then you won’t learn much.

There are also a couple of reasons split testing might not be the right fit for you:

- A/B testing with Facebook does require you to allocate budget to this, and it’s money that is not going towards your other ads;

- Because you split your audience in two, each ad set is more expensive than if you were running just one ad set with several ads;

- If you’re testing on a very small audience you might not get results that are statistically significant within 14 days.

If you’ve never run a Facebook app install ad campaign before, you should start simple and wait until you start getting good results before trying split testing.

“In my experience, split tests are helpful ONLY when you want to test an isolated variable (headline, CTA etc.) for a limited period of time (14 days). This makes its use case extremely limited right now.”

– Shamanth Rao, VP Growth & UA at FreshPlanet

“It looks ideal on paper but when using Facebook split testing you divide your audience size in 2 or more, so each adset costs more than if it was together. Plus, Facebook’s delivery is kinda random and incomprehensible so you have to fully trust Facebook on doing the delivery right. Great on theory, but tough in practice”

– Thomas Petit, 8fit

Advice for creative split testing on Facebook

One split test at once

Facebook takes care of this one: you can only test one variable at a time. This prevents you from testing two different creatives against two different audiences, which would be impossible to analyze.

Don’t split test, A/B test

Technically, for each variable you can create up to 5 Ad Sets (Facebook calls them Ad Set A, Ad Set B, etc.).

But no matter what you’re testing we recommend that you only test 2 versions.

This makes it easier to:

- Analyze the results;

- Reach the audience sizes (sample sizes) needed for statistical significance.

Define your sample size and give your test enough time

As for any A/B test, you want to define in advance how much time you’ll need to reach statistical significance.

Knowing your typical conversion rate when running Facebook ads, calculate your sample size (you can use this tool), just like we explained in our post about A/B testing on the Play Store with listing experiments.

If the budget you’re looking to allocate to this split test does not allow you to reach that sample size (i.e. you don’t have enough money to get to the number of impressions needed) within Facebook’s 14-day limit, then you’re taking the risk of not getting reliable results.

If you do have the budget to reach the sample size, run the test for the full period needed. Facebook will also send you an email when the results are known (treat them carefully).

If your regular campaigns (no split test) show differences in performance depending on the weekday, you should run the A/B test for at least 7 days so you can get the full picture.
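The sample size and budget check described above can be sketched in Python. This is a rough estimate using the standard normal-approximation formula for comparing two proportions, not the calculation of any specific tool. The function names, the 80% power default, the choice to treat each impression as a trial (so the “conversion rate” is the impression-to-install rate) and the CPM figure are all assumptions for illustration.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, relative_lift, alpha=0.05, power=0.8):
    """Approximate impressions needed per variant (two-sided two-proportion z-test)."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)  # rate you hope the new creative reaches
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # 1.96 for 95% significance
    z_beta = NormalDist().inv_cdf(power)           # 0.84 for 80% power
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


def budget_needed(impressions_per_variant, n_variants, cpm):
    """Spend required to buy the impressions, given a cost per 1,000 impressions."""
    return impressions_per_variant * n_variants * cpm / 1000


# Hypothetical inputs: 0.3% impression-to-install rate, hoping to detect a 20% lift.
needed = sample_size_per_variant(baseline_rate=0.003, relative_lift=0.20)
cost = budget_needed(needed, n_variants=2, cpm=10.0)  # assumed CPM of 10
print(f"Impressions per variant: {needed:,}")
print(f"Estimated budget for 2 variants: {cost:,.0f}")
```

If the estimated budget is more than you can spend in 14 days, the test is unlikely to reach significance. A smaller expected lift needs a much larger sample.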

Test only one creative element

As you can see in the examples in the “What to test” section, not only are we “only” A/B testing, we are also changing only one creative element.

This is because you want to know what you can attribute the results to. If you have different calls to action, headlines and videos, then you will have a hard time finding out what made one ad set perform better.

Which means you can’t apply the knowledge to future campaigns!

So keep it simple.

Note: if you’re looking into testing a bunch of variations in ad creative elements, have a look at the dynamic creative optimization (DCO) section below.

Test against a high-performing ad

Ad fatigue is real and at one point you might need to replace what were once your best performing ads.

When split testing with Facebook you should therefore aim at finding the next best ad creatives that you’re able to use.

So you want to A/B test something that you know already works against something new.

If you come close, the ad is most likely worth using (now or when your best performing ads start declining).

“if you have big budgets, ongoing advertising, clear creative best-performers and you want to test new ideas and concepts, it’s reasonable to run a separate split test first and if it performs to add the new creative to the mix.”

– Andrey Filatov, Wooga

Test significant creative changes (different concepts)

It’s already tough to get A/B test sample sizes that lead to significant results, so you want to prioritize testing very different concepts for the one creative element you’re testing.

If you test a headline, do not change only one word. If you test an image background, choose something very different.

And if you test video, don’t just change one scene at the end. Here are a few things you should try instead:

- Testing live action video (with people) vs. animated video;

- Testing a video focused on the app UI vs. explaining the concept;

- Focusing on a different benefit or value proposition;

- Starting your video with a different value proposition;

- Testing a long form video vs. a really short video.

The possibilities are endless, so you of course have to define priorities based on your budget.

How to create your creative split test

Facebook does a great job at guiding you when you’re creating your split test, and here is the step-by-step explanation by Facebook.

Analyzing the results

You can find your results in Facebook Ads Manager, both while the test is running (let it run until the sample size is reached!) and once it’s completed.

The winning ad set will be indicated in the reporting table, and you can open the reporting panel to get more info.

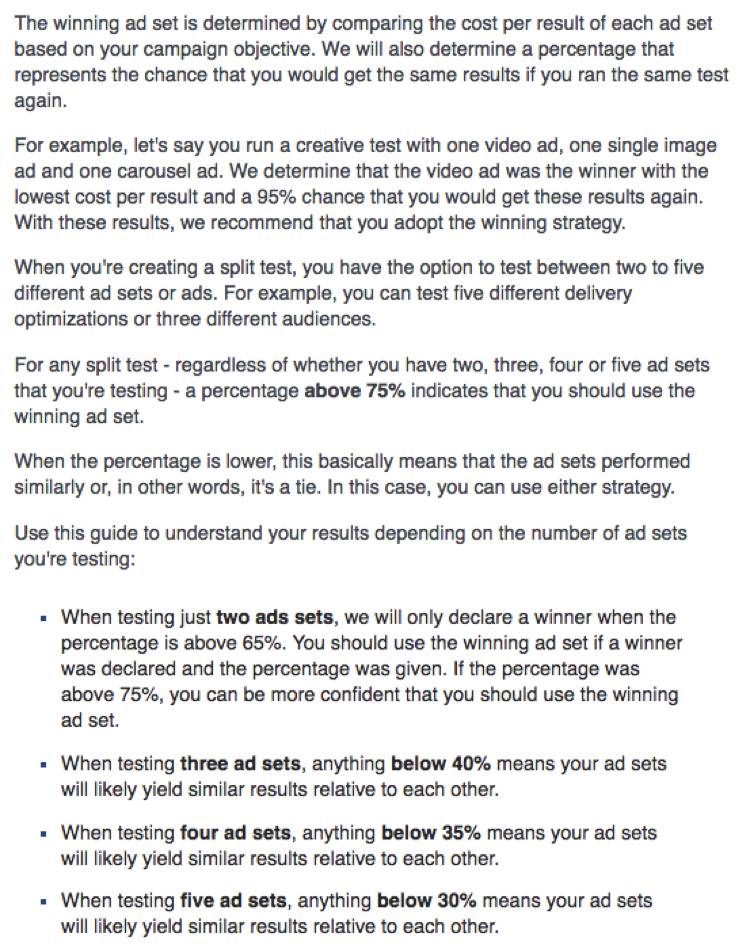

To determine the winning ad set, Facebook compares the cost per result of each ad set based on your campaign objective (install volumes or app event).

It also determines a percentage (the confidence level) that corresponds to the chance of getting the same results if you were to run that same test again.

And here you need to be quite careful, as Facebook is not really cautious in what they advise.

If you’ve read other posts we’ve written on A/B testing, you’ll notice that the thresholds Facebook uses to determine the winner of a split test are quite low.

Our advice is to stick with the first method they suggest: only trust a winner when the chance that you would get the same results again (statistical significance) is above 95% (or 90% at the lowest).

Anything else and you’re taking a bigger risk.
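If you want to sanity-check a reported winner yourself, a two-proportion z-test is one common way to do it. The Python sketch below is an assumption-laden illustration, not Facebook’s own calculation: it compares install rates per impression rather than cost per result, and it uses 1 minus the p-value as a rough stand-in for the confidence percentage. The function name and all numbers are hypothetical.

```python
import math
from statistics import NormalDist


def two_proportion_confidence(installs_a, impressions_a, installs_b, impressions_b):
    """Return (z, confidence) for a two-sided two-proportion z-test."""
    rate_a = installs_a / impressions_a
    rate_b = installs_b / impressions_b
    # Pooled rate under the assumption that both ad sets perform the same.
    pooled = (installs_a + installs_b) / (impressions_a + impressions_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / impressions_a + 1 / impressions_b))
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, 1 - p_value


# Made-up results for Ad Set A and Ad Set B.
z, confidence = two_proportion_confidence(300, 100_000, 360, 100_000)
verdict = "above" if confidence >= 0.95 else "below"
print(f"z = {z:.2f}, confidence = {confidence:.1%} ({verdict} the 95% threshold)")
```

With these made-up numbers the difference clears the 95% threshold. With 300 vs. 310 installs on the same impressions it would not come close.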

Note: as you can see, the more ad sets you test the harder it is to sort the best performing Facebook ads. Hence our advice to stick to only 2 ad sets.

Method 3 – Dynamic creative optimization (DCO)

How Facebook’s DCO works

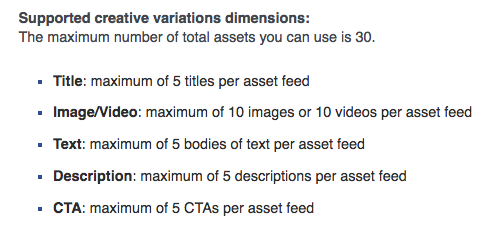

Dynamic Creative is a tool in the Ads Manager that automatically delivers the best combinations of your creative assets by using machine learning.

It is not a testing tool per se, as the idea is not to find the best combination for a niche audience but rather to select a broad audience so that Facebook automatically determines which combination works best with any given audience and placement.

In short the promise is: the right ad, to the right people, at the right place, at the right moment.

It takes the different components of the ad (images, videos, titles, descriptions, CTAs) and runs different combinations of these assets across audiences.

It’s a similar concept to what Google does with Universal App Campaigns except you can target the audiences better.

App installs and conversions (optimization for app events) are supported, and you can have both single image and single video ads.

Unlike split testing, DCO campaigns with Facebook let you test multiple variables and do not have a time constraint.

How to set up dynamic creative ads

Here is the step-by-step explanation by Facebook.

Analyzing the results of dynamic creative optimization

You’re only creating one ad with DCO: the ad that uses the best combination of assets.

But to understand what’s happening, you might want to figure out which types of videos, images, headlines, etc. perform the best.

That’s something you can see in your ad reports. Click on the “Breakdown” button and choose by which asset you want to see performance.

Be careful when trying to gain insights for other “regular” campaigns: using the best performing video, with the best performing headline, with the best performing CTA and so on will not necessarily lead to the best performing ad depending on the audience you’ll use.

Advice for running DCO ads

Budget: use your current bidding practice

Even though Facebook is going to create a lot of different combinations, you should bid as usual. Part of the budget goes to testing to find the right combination for the right audience, and overbidding would result in spending more on testing.

Use broad targeting

Avoid really niche interests for this kind of ad: Facebook’s machine learning needs a big enough audience to work with.

Of course if your regular targeting is already broad then you can use that!

Make sure assets uploaded will make sense together

This is very similar to the best practice for Universal App Campaigns creatives.

Because Facebook is going to mix and match your assets, you need to make sure that any combination created makes sense.

So do not use a headline that would not go with a specific video, or a CTA that does not make sense with your image.

Keep an eye on performance and exclude/replace assets

When looking at the performance breakdown, you’ll most likely see an ad asset (example: a video) that is getting a lot of impressions, which of course results in more spend. This is because the CTR for this asset is higher than the CTR for other similar assets (other videos).

However you should not let Facebook freely run this for you, as an asset that has a higher CTR might also lead to the most expensive CPI/CPA. In this case you want to exclude this ad asset from the future creative mix.

So just like the advice Thomas gives for Method 1, look at the full picture. For app install ads, the impression to install ratio is a great metric to observe.

“Monitor the performance closely. The best performing creative will take the majority of the views but may not be the best investment (and ROI) for you in the end.”

– Andrey Filatov, Wooga

Conclusion

Whether or not you should optimize your Facebook ad creatives is not the question.

Just like you want to target the right audience, you want your ads to perform once they’re seen by potential users.

And to do this you need to try different creatives for your app install ads and AEO campaigns. You now have 3 different methods to do this:

- Creating multiple ads in one ad set, where Facebook will serve the ads that perform best;

- Using Facebook’s split testing feature, which is going to split your audience in 2 and show a different ad set to each part (with only the ad creative changing);

- Using Facebook DCO campaigns, where Facebook will create a different ad for different people in your audience by combining assets you provide.

There’s no perfect solution, and the general rule here is: monitor your ads closely so you can adapt by turning off assets or ads that are not performing well even though Facebook serves them a lot.

Do you have any additional tips for one of these 3 methods? Let us know in the comments!
